5.DigitRecognition - SVM algorithm


1. SVM algorithm

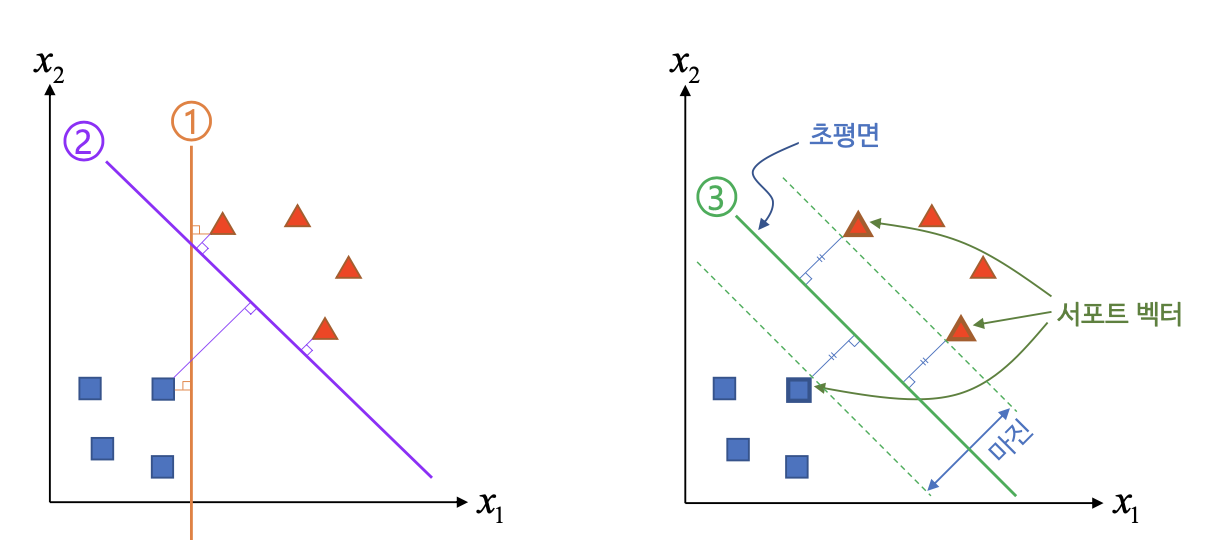

- What is the support vector machine (SVM) algorithm?

- A method that splits the data into two groups using the hyperplane that lies farthest from the samples of both groups.

- Maximum-margin hyperplane

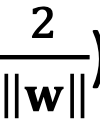

- Hyperplane: w * x + b = 0

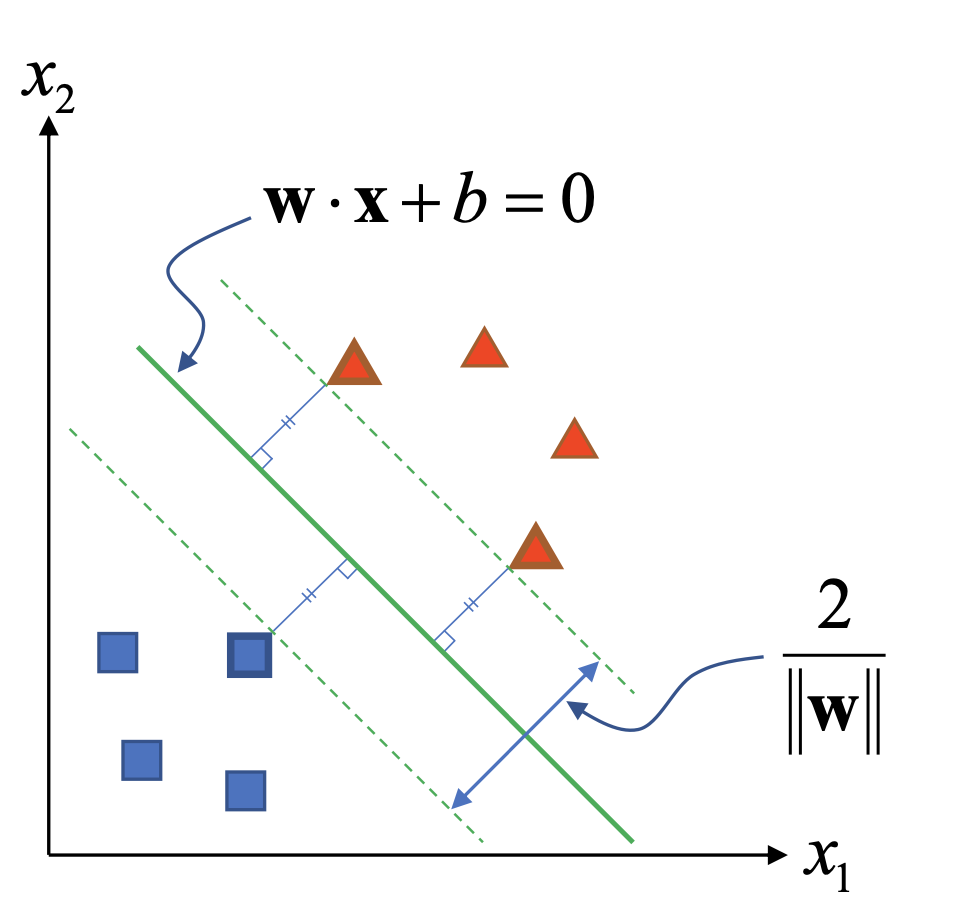

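- Find the w that maximizes the margin (for a hyperplane scaled so that the closest samples satisfy |w * x + b| = 1, the margin is 2 / ||w||).

- How to solve it? -> Define the constraints, then apply Lagrange multipliers, the KKT conditions, etc.

- The solution -> The optimal hyperplane for separating the given data.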
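The margin and the constraints mentioned above can be checked numerically. The following is a minimal sketch in plain Python, assuming the usual canonical scaling in which every training sample satisfies y * (w * x + b) >= 1. The sample points, `w`, and `b` are made-up values for illustration, not taken from the figures.

```python
import math

def margin_width(w):
    """Margin width 2 / ||w|| for a canonically scaled hyperplane w . x + b = 0."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

def satisfies_constraints(w, b, samples, labels):
    """Check y_i * (w . x_i + b) >= 1 for every training sample."""
    return all(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) >= 1.0 - 1e-9
        for x, y in zip(samples, labels)
    )

# Hypothetical 2-D training data with labels +1 / -1.
samples = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, 0.0)]
labels = [+1, +1, -1, -1]

# Candidate hyperplane (assumed values): 0.5 * x1 + 0.5 * x2 - 1 = 0.
w, b = (0.5, 0.5), -1.0

print(satisfies_constraints(w, b, samples, labels))
print(margin_width(w))
```

A smaller ||w|| gives a wider margin, which is why the optimization is usually stated as minimizing ||w|| subject to these constraints.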

-

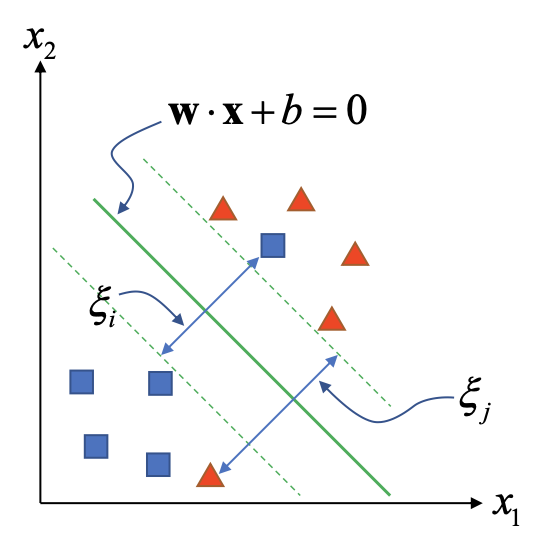

Allow misclassification

- If the given samples cannot be divided perfectly into two groups, misclassification is allowed.

- The C value is a parameter set by the user.

- If C is high, misclassification is penalized more heavily, so the training error will be low, but the margin will also be narrow.

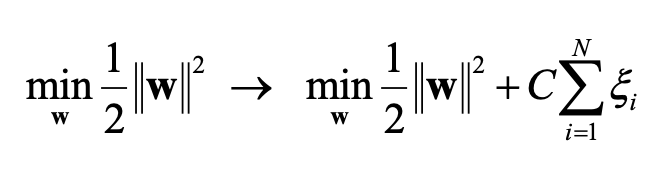

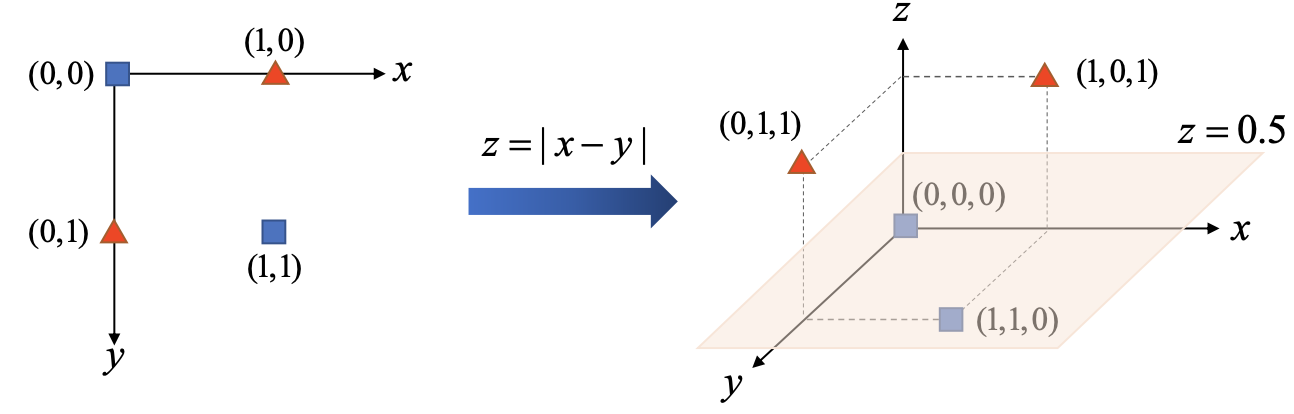
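The effect of C can be sketched with the common soft-margin cost, 0.5 * ||w||^2 + C * (sum of slack), where the slack of a sample is max(0, 1 - y * (w * x + b)). This formulation is an assumption about what the figures show, and the 1-D data and the two candidate hyperplanes below are invented for illustration.

```python
def decision_value(w, b, x):
    """Signed score w . x + b of one sample."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def soft_margin_objective(w, b, samples, labels, C):
    """0.5 * ||w||^2 plus C times the total slack (hinge loss) over all samples."""
    regularizer = 0.5 * sum(wi * wi for wi in w)
    total_slack = sum(
        max(0.0, 1.0 - y * decision_value(w, b, x))
        for x, y in zip(samples, labels)
    )
    return regularizer + C * total_slack

# Hypothetical 1-D data: the positive sample at 1.5 sits close to the negatives.
samples = [(0.0,), (1.0,), (1.5,), (4.0,), (5.0,)]
labels = [-1, -1, +1, +1, +1]

wide = ((2.0 / 3.0,), -5.0 / 3.0)  # wide margin, misclassifies the sample at 1.5
narrow = ((4.0,), -5.0)            # narrow margin, classifies every sample

for C in (1.0, 100.0):
    cost_wide = soft_margin_objective(*wide, samples, labels, C)
    cost_narrow = soft_margin_objective(*narrow, samples, labels, C)
    print(C, "wide margin wins" if cost_wide < cost_narrow else "narrow margin wins")
```

With a small C the wide-margin hyperplane has the lower cost even though it misclassifies one sample; with a large C the narrow-margin hyperplane becomes cheaper.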

- Separating non-linear data

- SVM is a linear classification algorithm, but real data may be distributed non-linearly.

- If the dimension of non-linear data is expanded, the data may become linearly separable.

- Example of dimension expansion

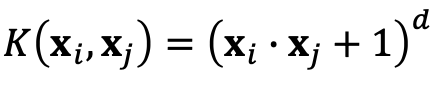
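As a small numeric illustration of dimension expansion, the sketch below maps 1-D values x to 2-D points (x, x^2). The data and the mapping are assumptions chosen for illustration and may differ from the example in the figure.

```python
def expand(x):
    """Map a 1-D value x to the 2-D point (x, x^2)."""
    return (x, x * x)

# Hypothetical 1-D data: outer points are +1, inner points are -1.
# No single threshold on x separates them.
xs = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
labels = [+1, +1, -1, -1, +1, +1]

# After expansion, the hyperplane w . z + b = 0 with w = (0, 1), b = -2.5
# (that is, the line x^2 = 2.5) separates the two classes.
w, b = (0.0, 1.0), -2.5
predicted = [
    1 if w[0] * z[0] + w[1] * z[1] + b > 0 else -1
    for z in map(expand, xs)
]
print(predicted == labels)
```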

- Kernel trick

- Instead of applying the mapping function directly, this method replaces the dot product of vectors in the SVM hyperplane computation with a non-linear kernel function.

- Main kernel functions

- Polynomial:

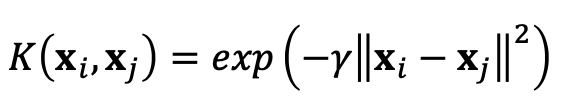

- Radial basis function (RBF):

- If the gamma value is high, the model depends heavily on the training data -> complex decision boundary -> higher possibility of overfitting.
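The kernel trick can be verified on a small case. The sketch below assumes the commonly used forms K(x, y) = (x . y + coef0)^degree for the polynomial kernel and K(x, y) = exp(-gamma * ||x - y||^2) for the RBF kernel; the exact parameterization in the figures (and in OpenCV) may differ. The helper names and the test vectors are hypothetical.

```python
import math

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def polynomial_kernel(x, y, degree=2, coef0=0.0):
    """Assumed form: (x . y + coef0) ** degree."""
    return (dot(x, y) + coef0) ** degree

def rbf_kernel(x, y, gamma):
    """Assumed form: exp(-gamma * ||x - y||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)

def phi(x):
    """Explicit 3-D feature map matching the degree-2 polynomial kernel (coef0 = 0) in 2-D."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)

x, y = (1.0, 2.0), (3.0, 0.5)

k_trick = polynomial_kernel(x, y)   # computed in the original 2-D space
k_explicit = dot(phi(x), phi(y))    # computed after mapping to 3-D
print(math.isclose(k_trick, k_explicit))

# A larger gamma makes the similarity fall off much faster with distance.
print(rbf_kernel(x, y, gamma=0.1) > rbf_kernel(x, y, gamma=10.0))
```

The kernel gives the same value as the dot product in the expanded space without ever building the expanded vectors, which is the point of the trick.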

2. Use OpenCV SVM

- Create an SVM object

cv2.ml.SVM_create() -> retval

- retval : cv2.ml_SVM object

- Set SVM type

cv2.ml_SVM.setType(type) -> None

- type : the SVM type (e.g. cv2.ml.SVM_C_SVC)

- Set SVM kernel

cv2.ml_SVM.setKernel(kernelType) -> None

- kernelType : type of kernel function (e.g. cv2.ml.SVM_LINEAR, cv2.ml.SVM_POLY, cv2.ml.SVM_RBF)

- SVM automatic training (k-fold cross-validation)

cv2.ml_SVM.trainAuto(samples, layout, responses, kFold=None, ...) -> retval

- samples : training data matrix

- layout : layout of the training data (cv2.ml.ROW_SAMPLE or cv2.ml.COL_SAMPLE)

- responses : response vector for each training sample

- kFold : number of subsets (folds) used for cross-validation

- retval : True if training completes normally

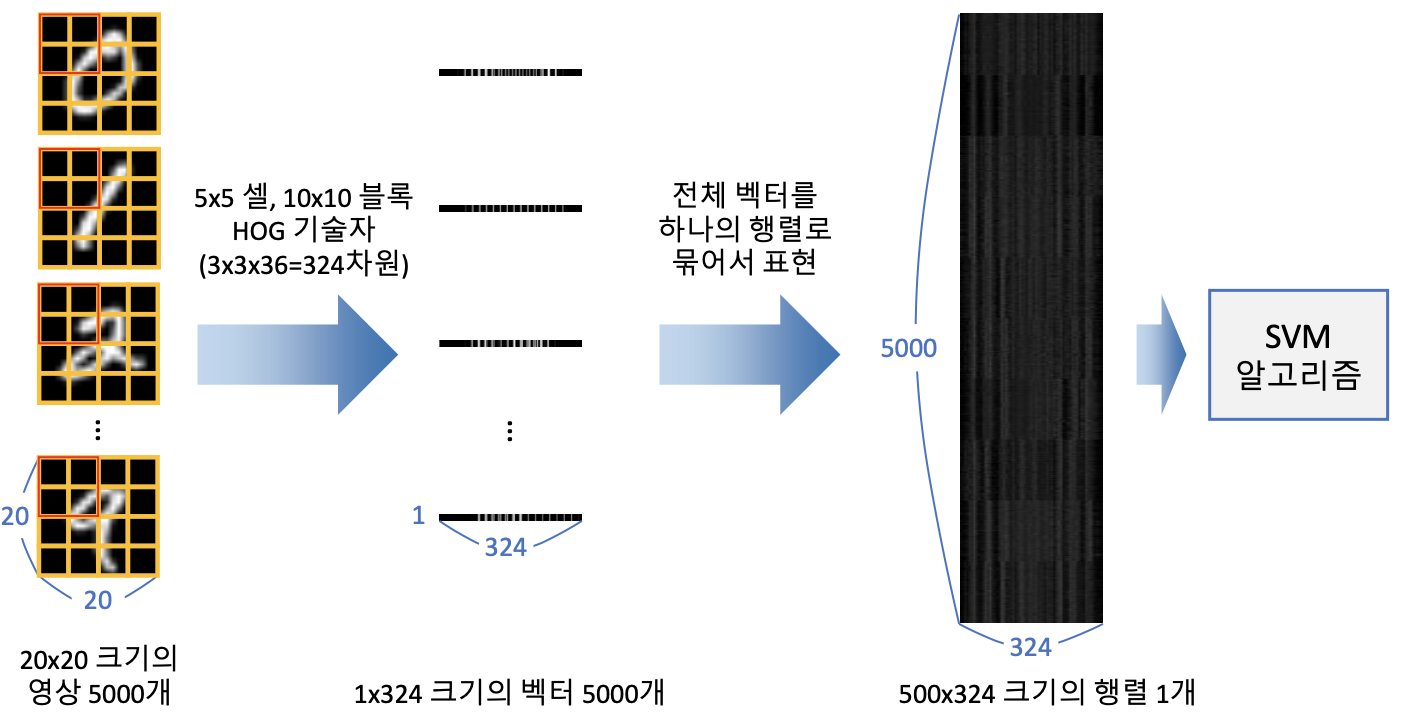

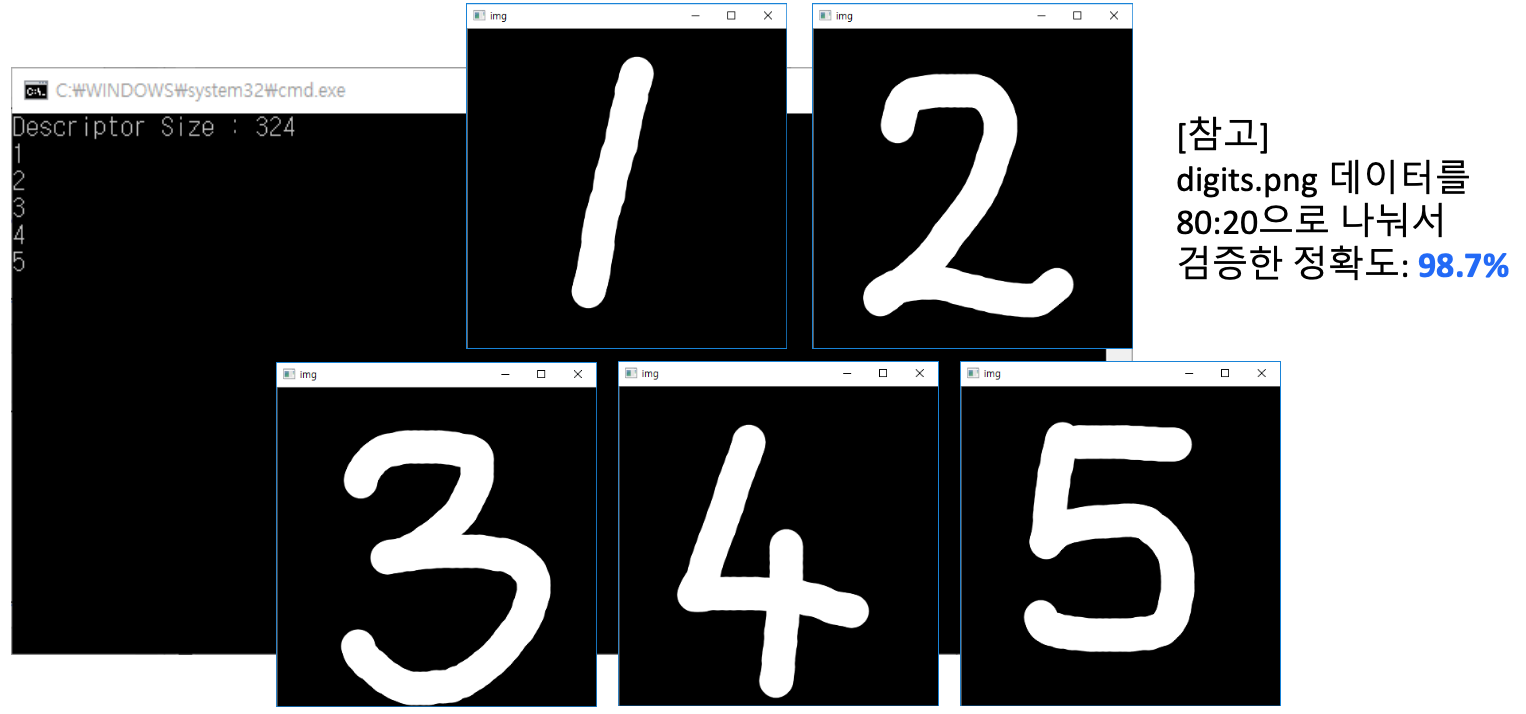

3. HOG & SVM digit recognition

- Train the SVM using HOG feature vectors

- HOG & SVM digit recognition example
